The test phase primarily focuses on the validation and verification of the software being developed.

"Validation refers to the assurance that a product, service, or system meets the needs of the customer and other identified stakeholders. It often involves acceptance and suitability with external customers."

"Verification refers to the evaluation of whether or not a product, service, or system complies with a regulation, requirement, specification, or imposed condition. It is often an internal process."

The software shall be tested in the following areas:

| Tests | Descriptions |
| --- | --- |
| Functional | Tests conducted to verify that the function specifications are met and that the system meets business or operational requirements. This involves feeding input to the system and examining whether the output is as expected. Refer to section 2.7.2. |
| Performance | Tests conducted to identify areas for improvement in the system, such as resource bottlenecks, server configuration issues, application configuration issues, long-running application logic, and errors and exceptions. Refer to section 2.7.3. |
| Security | Tests conducted to uncover vulnerabilities in the system and to check whether the data is secured against unauthorized access. Refer to section 2.7.4. |

2.7.1 Test Planning, Design and Reporting

Objective: Tests are conducted to ensure that the application is rolled out with minimal risk of operational or performance failures.

Key areas for testing:

- Functional

- Performance

- Security

Approach:

Figure 2.7.1 Test Planning, Design and Reporting

2.7.2 Functional Testing

Functional testing is a type of software testing whereby the system is tested against the functional requirements/specifications. Functions (or features) are tested by feeding them input and examining the output. Functional testing ensures that the requirements are properly satisfied by the application.

Test Type: Blackbox testing

Objective: To verify that the function specifications are met and that the system meets business or operational requirements. This test shall be conducted by the software test team and system users.

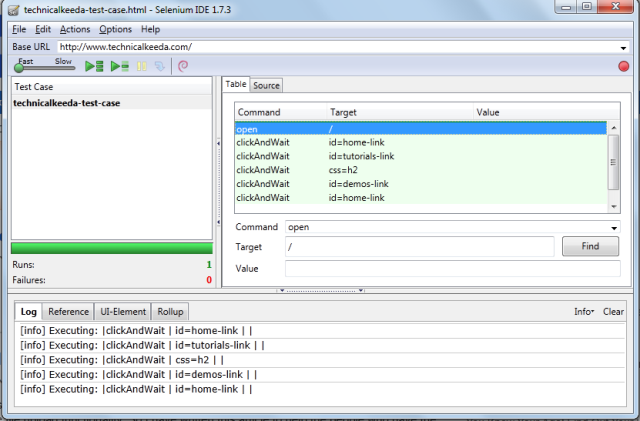

Test Methodology:

1) Identify the key functions for each module for testing

2) Create the dummy input data based on the functionalities

- Expected input data - Data used to verify that the functions produce the expected output

- Unexpected input data - Data used to verify that the system is able to handle unexpected errors

3) Define the Acceptance Criteria for Output with Users

- Acceptance criteria for output shall be jointly defined by business, application team, developers and system users

4) Test Execution

- Tests shall be executed by system users and focus groups

- Test scripts used by system users on key functionalities shall be converted into automated functional tests for regression testing

5) Result analysis of both input and output to verify that the function specifications are met and unexpected conditions are handled

6) System verification with users based on business or operational requirements
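The methodology above can be sketched in code. The following is a minimal illustration only, assuming a hypothetical function under test (`parse_age`) and hypothetical helper names (`FunctionalCase`, `run_case`); none of these come from the guideline itself. It shows how expected input data is checked against an agreed output, and how unexpected input data is checked against an agreed way of failing.

```python
from dataclasses import dataclass
from typing import Callable, Optional


def parse_age(raw: str) -> int:
    """Hypothetical function under test: parse an applicant's age from form input."""
    value = int(raw.strip())  # raises ValueError for non-numeric input
    if not 0 <= value <= 150:
        raise ValueError(f"age out of range: {value}")
    return value


@dataclass
class FunctionalCase:
    """One row of dummy input data plus its acceptance criterion (illustrative)."""
    name: str
    raw_input: str
    expected_output: Optional[int] = None  # set for expected input data
    expected_error: Optional[type] = None  # set for unexpected input data


def run_case(func: Callable[[str], int], case: FunctionalCase) -> bool:
    """Return True when the observed behaviour matches the acceptance criterion."""
    try:
        actual = func(case.raw_input)
    except Exception as exc:
        # An error is acceptable only if the case declared it in advance.
        return case.expected_error is not None and isinstance(exc, case.expected_error)
    return case.expected_error is None and actual == case.expected_output


CASES = [
    # Expected input data
    FunctionalCase("valid age", "42", expected_output=42),
    FunctionalCase("padded input", " 7 ", expected_output=7),
    # Unexpected input data
    FunctionalCase("non-numeric", "abc", expected_error=ValueError),
    FunctionalCase("out of range", "-1", expected_error=ValueError),
]

# Result analysis: map each case name to pass (True) or fail (False).
results = {case.name: run_case(parse_age, case) for case in CASES}
```

Keeping the cases as data, separate from the runner, is one way to let the same cases be replayed later as regression tests.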

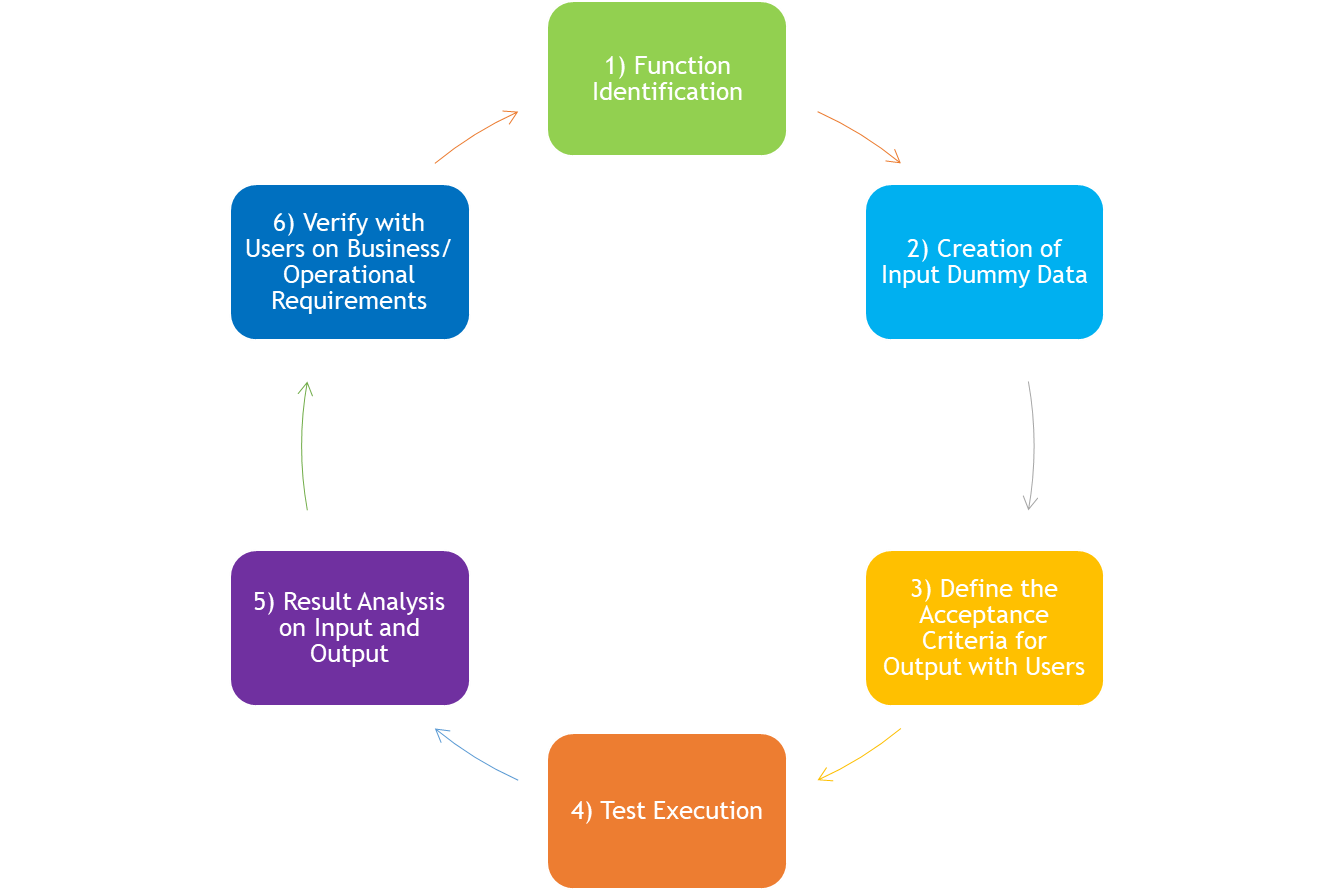

Example of Functional Test Tool: Selenium

Figure 2-11 Selenium: Functional Test Tool

Functional Testing Workflow

Figure 2-12 Functional Testing Workflow

2.7.3 Performance Testing

Performance testing is a non-functional testing technique performed to determine the system parameters in terms of responsiveness and stability under various workloads. Performance testing measures the quality attributes of the system, such as scalability, reliability and resource usage.

Test Type: Blackbox testing

Objective: To identify the system behavior and performance under different user loads, and to identify the system breaking point and areas for improvement. This test shall be conducted by the software test team.

Tools Required: Load generation tools such as JMeter, and application and system performance monitoring tools such as Dynatrace

Test Methodology:

1) Identify the key functions for key modules for testing

2) Create the dummy data based on the identified functionalities

3) Define the Quality Acceptance Criteria

4) Number of concurrent users (10% of total registered system users, 20% for critical systems)

5) Response time by transaction complexity

- Simple (within 2 secs) – Login, Landing pages, Info display

- Medium (within 4 secs) – Search, Form submission

- Complex (within 12 secs) – Monthly report generation

6) Transaction failure rate

- Below 2% if no error details

- Below 1% if errors or exceptions are being captured

7) System resource utilization

8) Errors and exceptions

9) Test Execution

- Smoke Test (To verify if the test scripts, dummy data and system are ready for testing)

- Load Test (To identify the system behaviour and performance under different user loads; it should run for 3 cycles, 5 days apart, to allow fine tuning or optimization of the areas for improvement)

- Stress Test (To identify the system breaking point for fine tuning and capacity planning)

10) Result analysis

- Identify the number of concurrent users that the system can support with minimal failure rates while meeting the response time criteria

- Identify the system resource utilization for configuration tweaking and capacity planning

- Identify the errors and exceptions for error handling and fine tuning
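The quality acceptance criteria and the first result-analysis step above can be expressed as a small check. This is a sketch under stated assumptions, not a prescribed implementation: the names (`LoadResult`, `target_concurrent_users`, `max_supported_users`) are hypothetical, the user target is rounded up, and "within N secs" is interpreted as applying to the slowest observed transaction, since the guideline does not say whether an average, percentile or maximum is meant.

```python
import math
from dataclasses import dataclass
from typing import Dict, List

# Response time limits by transaction complexity, as listed above (seconds).
RESPONSE_TIME_LIMIT_SECS = {"simple": 2.0, "medium": 4.0, "complex": 12.0}


def target_concurrent_users(registered_users: int, critical: bool = False) -> int:
    """10% of registered users, or 20% for a critical system (rounding up is an assumption)."""
    percent = 20 if critical else 10
    return math.ceil(registered_users * percent / 100)


def failure_rate_limit(errors_captured: bool) -> float:
    """Below 1% when errors or exceptions are captured, otherwise below 2%."""
    return 0.01 if errors_captured else 0.02


@dataclass
class LoadResult:
    """Outcome of one transaction type at one user load (illustrative structure)."""
    concurrent_users: int
    complexity: str              # "simple", "medium" or "complex"
    response_times: List[float]  # seconds, one entry per successful transaction
    failed: int                  # number of failed transactions
    errors_captured: bool = True

    def failure_rate(self) -> float:
        total = len(self.response_times) + self.failed
        return self.failed / total if total else 0.0

    def meets_criteria(self) -> bool:
        if not self.response_times:
            return False  # nothing succeeded, so nothing can be accepted
        # Assumption: the limit applies to the slowest observed transaction.
        slowest = max(self.response_times)
        return (slowest <= RESPONSE_TIME_LIMIT_SECS[self.complexity]
                and self.failure_rate() < failure_rate_limit(self.errors_captured))


def max_supported_users(results: List[LoadResult]) -> int:
    """Highest user load reached before any result fails the criteria (0 if none passes)."""
    passed_by_load: Dict[int, bool] = {}
    for result in results:
        previous = passed_by_load.get(result.concurrent_users, True)
        passed_by_load[result.concurrent_users] = previous and result.meets_criteria()
    supported = 0
    for load in sorted(passed_by_load):
        if not passed_by_load[load]:
            break  # first failing load; higher loads are not considered supported
        supported = load
    return supported
```

For example, a system with 5,000 registered users would be tested at 500 concurrent users, or 1,000 if it is classified as critical.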

2.7.4 Security Testing

The objective of security testing is to minimize disruptions or damages by preventing and minimizing security incidents. It also ensures that the system’s security complies with the security standards set by the government.

Example of Security Test Tools: Metasploit, Nmap

Areas for Testing:

- Penetration Testing - To emulate the real-life situation of potential attackers attempting to break into ICT systems. The findings from the tests are used to address the vulnerabilities and weaknesses of the system.

- Disaster Recovery Testing - Test that is conducted to ensure that the organization is able to recover the data and restore its operations in the event of unexpected service disruption such as power failure, malicious attack and natural disaster.
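One part of disaster recovery testing, confirming that recovered data matches the original, can be automated. The sketch below is an illustration only and is not taken from the guideline or from RAKKSSA: it assumes the original and restored data are available as two directory trees, and the function names (`digest_tree`, `restore_mismatches`) are hypothetical. It compares SHA-256 digests file by file.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def digest_tree(root: Path) -> Dict[str, str]:
    """Map each file path (relative to root) to the SHA-256 digest of its contents."""
    return {
        str(path.relative_to(root)): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def restore_mismatches(source: Path, restored: Path) -> List[str]:
    """List files that are missing from, extra in, or different in the restored copy."""
    before = digest_tree(source)
    after = digest_tree(restored)
    return sorted(
        name
        for name in before.keys() | after.keys()
        if before.get(name) != after.get(name)
    )
```

An empty list indicates that the restored tree matches the source. This covers data integrity only; restoring operations (services, configuration, access) still has to be verified separately.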

Note: For the detailed cybersecurity approach, refer to MAMPU’s RAKKSSA document.

The above tests shall be conducted before the system goes live as part of quality management. Using DevOps practice, subsequent tests shall be automated as part of the CI/CD pipeline to reduce manual intervention. Refer to section 2.12 for CI/CD workflow.

2.7.5 Software Testing Tools

Examples of tools that can be used to conduct or assist in software testing are as follows:

| Tools | Descriptions |
| --- | --- |
| Jenkins (integration) | An open source automation server that helps to automate parts of the software development process, supporting continuous integration and facilitating the technical aspects of continuous delivery |
| Selenium (functional) | An open source browser automation and functional test tool that can be used to record and play back tests to validate the functional aspects of the system |
| NeoLoad (performance) | A commercial load and stress testing tool that simulates virtual users and measures the performance of web and mobile applications |
| JMeter (performance) | An open source load and stress testing tool that simulates virtual users and provides extensions for basic infrastructure monitoring |

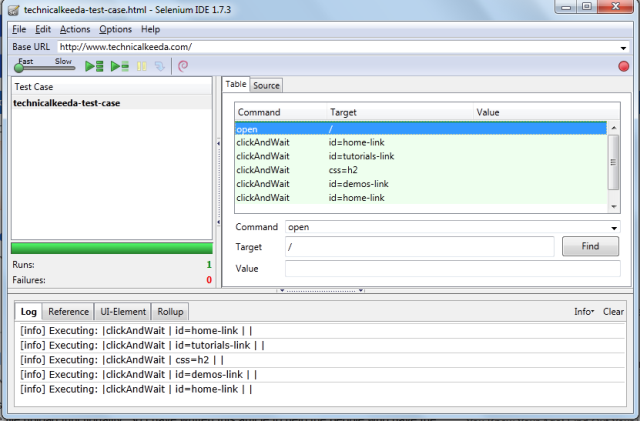

Selenium - Functional Test Tool:

Figure 2-13 Selenium Functional Test Tool

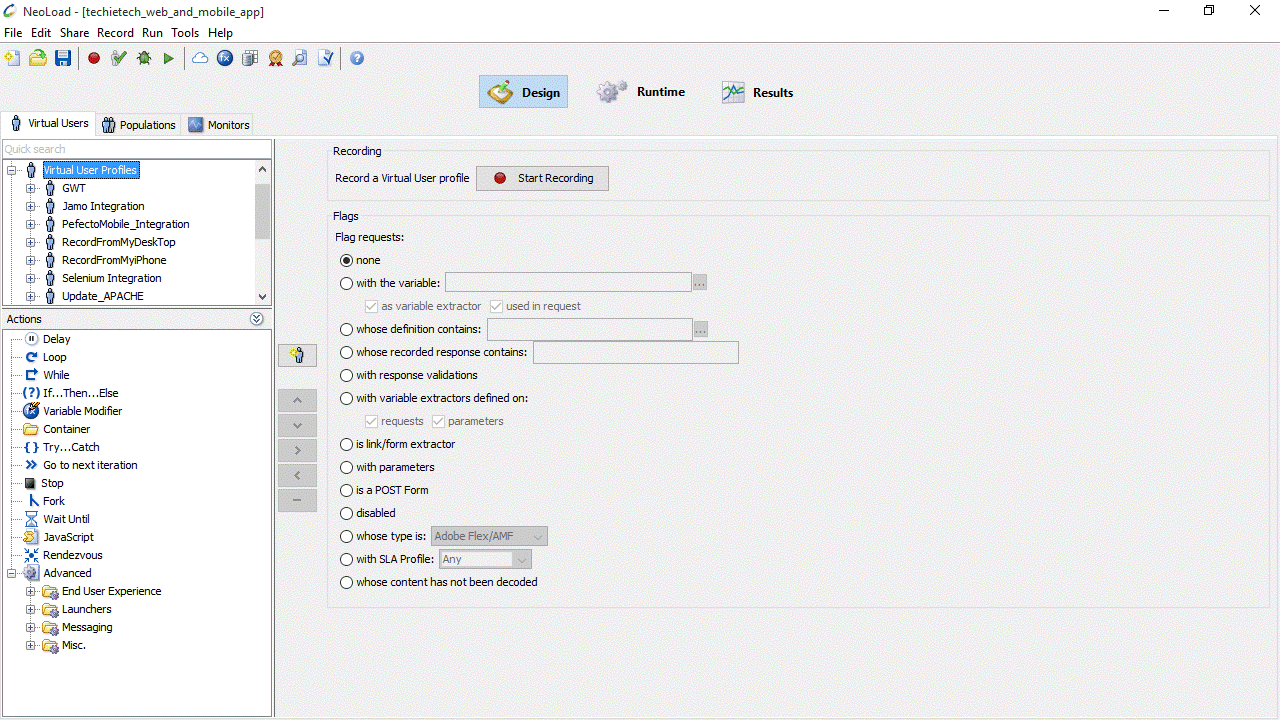

NeoLoad - Load Testing Tool:

Figure 2-14 NeoLoad: Load Testing Tool

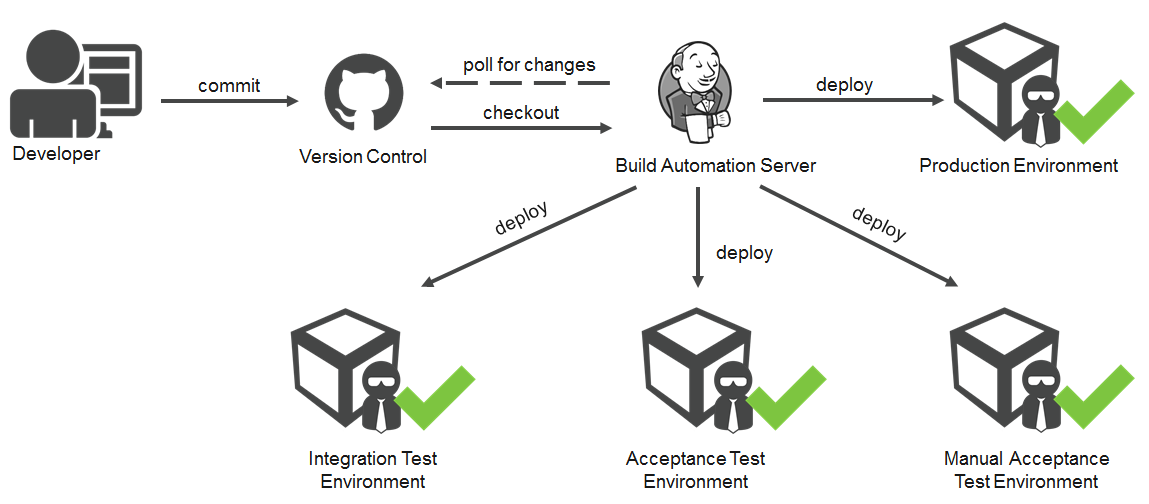

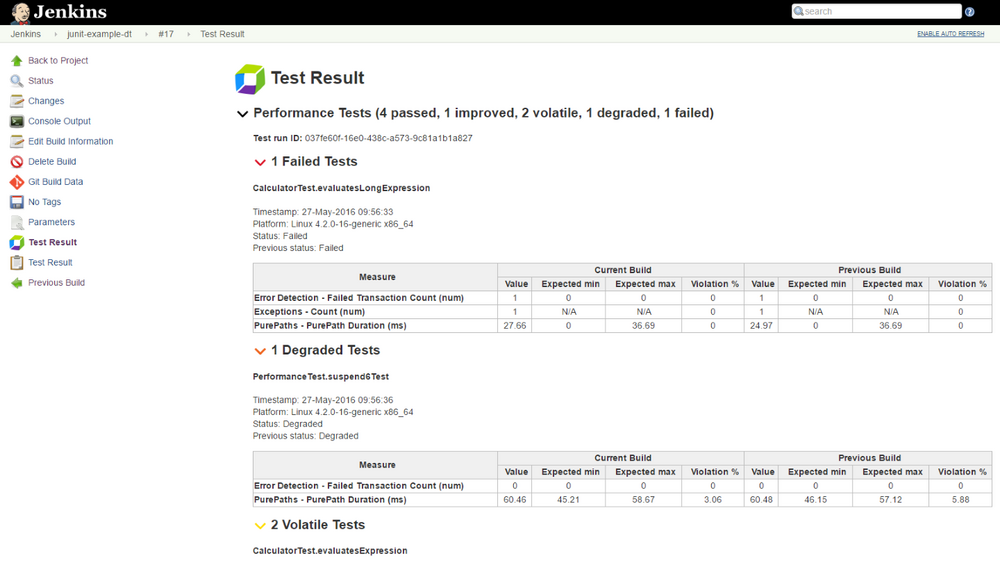

Before test automation can be done, test scripts and tools must be prepared to ensure readiness for unit or integration testing. Once the test scripts are ready, they can be integrated into a CI tool such as Jenkins to automate the build and test process. Refer to the figures below:

Figure 2-15 Jenkins Build and Test Automation Workflow

Figure 2-16 Jenkins Build and Test Automation
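As a minimal sketch of a test script prepared for such automation, the example below uses Python's standard `unittest` module. The unit under test (`add_tax`) and the test class are hypothetical and exist only for illustration. The point it shows is that the script reduces the test outcome to a process exit code, which CI tools, Jenkins included, generally use to decide whether a build step passed or failed.

```python
import unittest


def add_tax(amount_cents: int, rate_percent: int) -> int:
    """Hypothetical unit under test: add tax to an amount given in cents."""
    return amount_cents + amount_cents * rate_percent // 100


class AddTaxTest(unittest.TestCase):
    def test_standard_rate(self):
        self.assertEqual(add_tax(10_000, 6), 10_600)

    def test_zero_rate(self):
        self.assertEqual(add_tax(10_000, 0), 10_000)


def run_suite() -> int:
    """Run the tests and return a process exit code (0 = pass, 1 = fail)."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(AddTaxTest)
    result = unittest.TextTestRunner(verbosity=1).run(suite)
    return 0 if result.wasSuccessful() else 1


# In a CI job, the script would end with: sys.exit(run_suite())
```

A build step that invokes this script would then be marked as failed whenever any test fails, without a person having to read the test output.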

2.7.6 Test Deliverables

The test phase shall include the following deliverables:

- Test Plan

- Test Report